04 Feb 2026

I presented our work on Tracing Phonetic Features in Automatic Speech Recognition Models in the joint colloquium of the phonetics and corpus linguistics groups at Humboldt University of Berlin.

Thank you for hosting me, Tine Mooshammer, Malte Belz, and Sarah Wesolek!

The talk was based on our Interspeech 2024 paper, where we used ASR to examine coarticulation, and on our recent Computer Speech & Language paper, where we investigated how ASR models handle locally perturbed speech signals. 👀

If you are planning a trip to Berlin one of these days, there is surely no shortage of activities.

I can recommend booking a free tour of the Reichstag for a behind-the-scenes perspective on German politics 🏛, taking the bus to Dahlem to enjoy expressionist art at the Brücke Museum 🎨, and strolling through the city with your eyes open to discover gems like the Yellow Man mural by Brazilian street artists Os Gêmeos. 💚 💛 💙

11 Dec 2025

Under the motto Towards the New Era of Speech Understanding, this year’s IEEE Automatic Speech Recognition and Understanding (ASRU) Workshop brought researchers together in Honolulu, Hawaiʻi. 🏝️

Together with Suyoun Kim (Amazon, USA), we had the exciting role of Student and Volunteer Chairs for the workshop.

We had the pleasure of working with a group of highly motivated students from the University of Hawaiʻi at Mānoa, who helped us make sure the workshop ran seamlessly.

On top of that, we organized a mentoring program, bringing together early-career researchers and experienced mentors. Mentees shared that the meet-ups gave them valuable clarity on research directions and career paths, while mentors enjoyed fresh perspectives from the next generation of researchers. Some impressions from these meet-ups are shared below.

It was fantastic working alongside the tireless General Chairs, Bowon Lee (Inha University, Korea), Kyu Han (Oracle, USA), and Chanwoo Kim (Korea University), whose dedication made the conference a success! Mahalo! 💛 💛 💛

09 Dec 2025

The 49th Austrian Linguistics Conference took place at the University of Klagenfurt and featured the phonetics workshop „Laute von allen Seiten: Phonetik und ihre Teilbereiche als transdisziplinäres Interessensfeld“ (Sounds from all sides: phonetics and its subfields as a transdisciplinary field of interest), where we presented and discussed our work in progress on question intonation in Bulgarian Judeo-Spanish.

We thank the organizers Nathalie Elsässer, Hendrik Behrens-Zemek, Jan Luttenberger (ISF Austrian Academy of Sciences), Dragana Rakocevic (University of Graz), and Lukas Nemestothy (University of Vienna) for putting together a highly varied program and for fostering a very pleasant atmosphere of exchange.

I would like to highlight the plenary talk by Brigitta Busch (University of Vienna) entitled Hinhören! Poslušajmo. Slowenisch sprechen in Kärnten. Govoriti slovensko na Koroškem (Listen! Speaking Slovene in Carinthia), which discussed the historically multilingual region of Kärnten/Koroška.

The talk explained how confronting suppressed history and focusing on people’s lived emotional experiences of language can support a new and more open process of reclaiming Slovene in the region today.

20 Oct 2025

Most voice assistants still sound female – even when designed to be neutral? 🤔

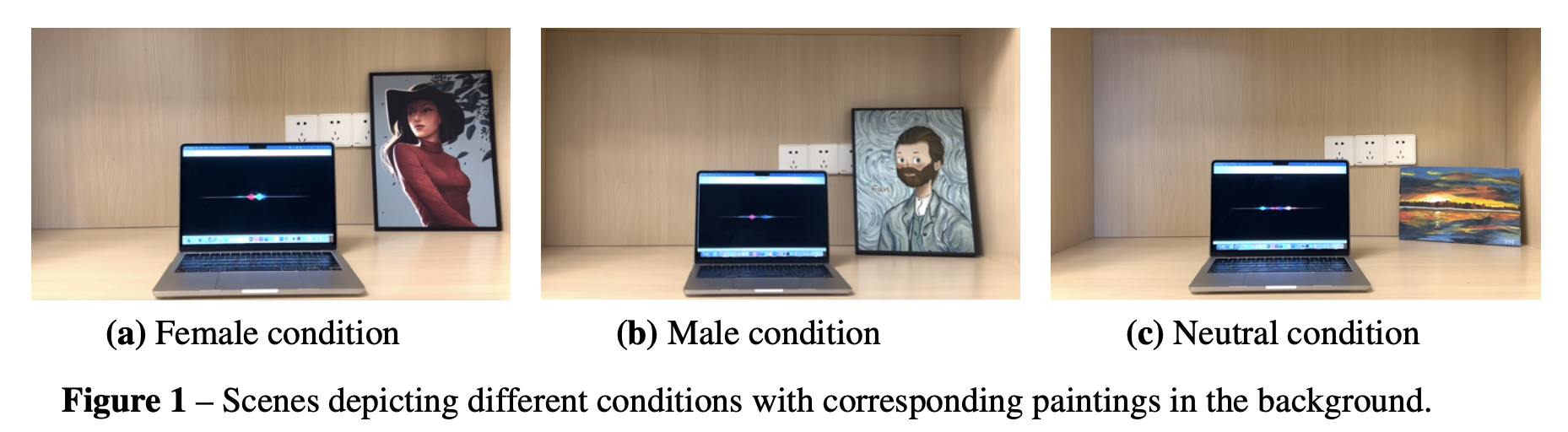

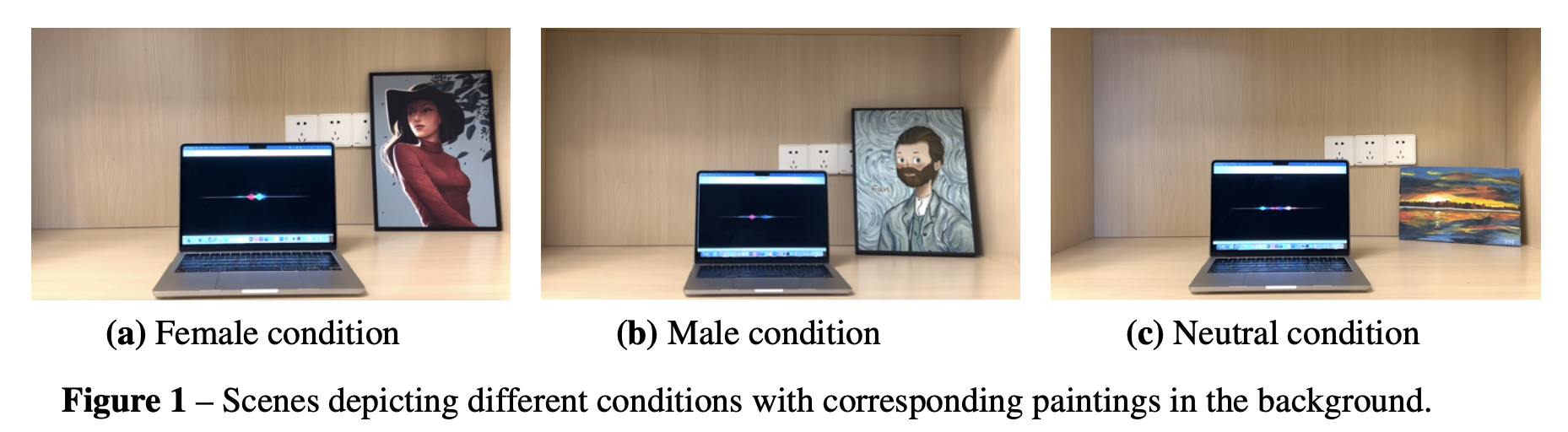

In our new study, The Influence of Visual Context on the Perception of Voice Assistant Gender, we explored how people perceive Apple Siri’s gender-neutral voice Quinn.

We found that listeners tended to rate Quinn as more female-sounding – especially when a female portrait was shown at the same time (see Figure 1a). This confirms that what we see 👀 can strongly influence what we hear 👂, even if it is unrelated to the task at hand.

Designing truly gender-neutral voice assistants isn’t just about the sound itself – our expectations and the visual context play a powerful role too.

We had the pleasure of presenting this work at P&P 2024 in Halle, Germany. Read the full paper in the proceedings (pp. 55-63). #openaccess 🔓

08 Oct 2025

This year’s P&P conference took place at the beautiful Leipzig University Library, Bibliotheca Albertina.

A great opportunity to discuss our work on Question Intonation in Bilingual Speakers of Bulgarian and Judeo-Spanish with the vibrant community of phoneticians and phonologists from the DACH+ region.